INTRODUCTION

OSINT (Open Source Intelligence) refers to the collection and analysis of PAI (Publicly Available Information) to produce actionable intelligence. At the beginning of the 20th century, it relied on analog sources such as newspapers, radio broadcasts, and government publications. By the end of that century, the explosion of websites, online forums, and searchable databases enabled analysts to access global information rapidly and efficiently. Search engines and specialized online tools further streamlined the process, allowing for targeted and scalable intelligence gathering.

The integration of AI (Artificial Intelligence) and ML (Machine Learning) has automated the extraction, processing, and analysis of massive volumes of data from diverse sources, including social media and geospatial platforms. AI-driven tools can sift through billions of data points, identify patterns, and generate predictive insights at a scale far beyond human capacity.

Combining OSINT and AI offers powerful capabilities for addressing complex real-world challenges, potentially increasing the speed, accuracy, and reliability of intelligence gathering and analysis. However, it also raises significant concerns about the reliability and validity of the results:

- AI Hallucinations: AI can generate information that sounds credible but is entirely fabricated. This risk makes rigorous validation essential.

- Source Transparency: AI often does not provide clear citations or traceable sources, which complicates source validation.

Both OSINT analysts and AI users must maintain a critical, questioning approach, applying verification principles to every piece of information.

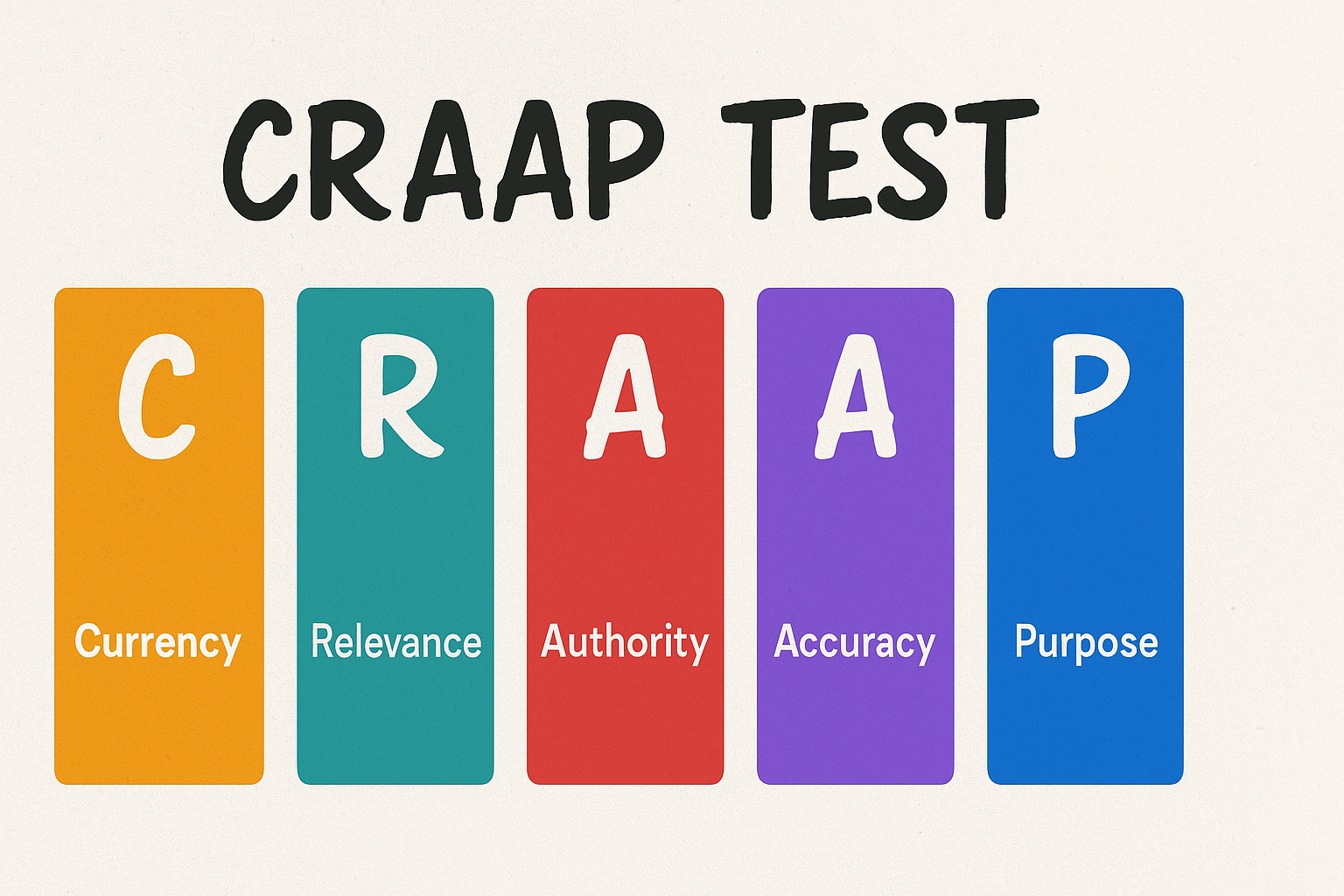

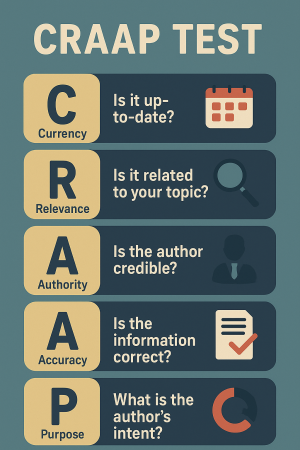

THE CRAAP TEST

The CRAAP test is a widely used, systematic framework that helps analysts assess the reliability of information by guiding them through five criteria: Currency, Relevance, Authority, Accuracy, and Purpose. It can be applied to both OSINT and AI-generated outputs to assess their trustworthiness.

Here is how each component specifically supports the evaluation of AI-generated OSINT:

- Currency: Timeliness is critical, especially for rapidly changing situations such as geopolitical events, because outdated information may lead to incorrect conclusions. The CRAAP test prompts analysts to check whether the AI’s information is up to date. This is crucial because many AI models have a knowledge cutoff and may not reflect recent developments, so this step helps keep obsolete intelligence out of decision-making.

- Relevance: Information should directly address the intelligence question or operational need. AI-generated content can be off-topic or overly generic, so this criterion prompts analysts to check whether the output actually answers their research question. This ensures that only pertinent, actionable information is used and that irrelevant or tangential data is filtered out.

- Authority: Information can come from a wide range of sources, not all of them authoritative, so the source’s expertise, reputation, and biases must be examined. The test asks users to scrutinize the credibility of the sources or authors the AI references. Because AI tools may generate information without clear attribution, this step pushes analysts to verify the expertise and legitimacy of cited organizations or individuals, reducing the risk of relying on untrustworthy sources. AI has no authority of its own: it synthesizes content from its training data, so its outputs must be cross-verified against authoritative external sources.

- Accuracy: OSINT is prone to misinformation, especially from social media or unverified outlets, and AI may produce “hallucinations”: plausible but false or misleading information. By requiring cross-verification of facts and checks for supporting evidence, the CRAAP test helps detect hallucinations, misinformation, and unsupported claims. Analysts are encouraged to corroborate AI outputs with independent, authoritative sources to ensure factual correctness.

- Purpose: Analysts should assess the intent behind the information and be aware of sources with clear agendas or biases. This matters for AI-generated content, which may reflect biases in its training data, be shaped by the wording of the prompt, or present information in a way meant to persuade or entertain rather than inform objectively. Analysts therefore need to verify whether the content is neutral or slanted.
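The five checks above can be recorded per claim as a simple structured checklist. The following is a minimal Python sketch, assuming a hypothetical `ClaimReview` record that the analyst fills in and hypothetical thresholds (`max_age_days`, `min_corroborations`). The CRAAP test itself prescribes no numeric cut-offs, and the code does not evaluate content: it only tallies judgments the analyst has already made, so that no criterion is silently skipped.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class ClaimReview:
    """An analyst's CRAAP notes on one AI-generated OSINT claim (hypothetical schema)."""
    claim: str
    info_date: Optional[date]               # Currency: date the facts refer to, if known
    answers_question: bool                  # Relevance: addresses the intelligence question?
    verified_sources: List[str] = field(default_factory=list)  # Authority: sources confirmed to exist
    corroborations: int = 0                 # Accuracy: independent sources confirming the claim
    neutral: bool = True                    # Purpose: no evident agenda or slant


def craap_failures(review: ClaimReview, as_of: date,
                   max_age_days: int = 30, min_corroborations: int = 2) -> List[str]:
    """Return the CRAAP criteria the claim fails; an empty list means no flags were raised.

    max_age_days and min_corroborations are illustrative assumptions, not part of
    the CRAAP test; they should be set per intelligence question.
    """
    failures = []
    # Currency: an undated claim, or one older than the allowed window, is flagged.
    if review.info_date is None or (as_of - review.info_date).days > max_age_days:
        failures.append("Currency")
    # Relevance: off-topic or generic output does not answer the question.
    if not review.answers_question:
        failures.append("Relevance")
    # Authority: the AI itself is not a source; require at least one verified source.
    if not review.verified_sources:
        failures.append("Authority")
    # Accuracy: require corroboration from independent sources to catch hallucinations.
    if review.corroborations < min_corroborations:
        failures.append("Accuracy")
    # Purpose: slanted framing (from training data or the prompt) is flagged for review.
    if not review.neutral:
        failures.append("Purpose")
    return failures


review = ClaimReview(
    claim="Port X closed to commercial traffic",  # hypothetical example claim
    info_date=date(2024, 1, 10),
    answers_question=True,
    verified_sources=["official port authority notice"],  # hypothetical source
    corroborations=1,
)
print(craap_failures(review, as_of=date(2024, 3, 1)))  # ['Currency', 'Accuracy']
```

Returning the list of failed criteria, rather than a single pass/fail score, keeps the result traceable: the analyst sees which criterion needs more work instead of a number that hides it.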

The CRAAP test is a practical, structured method for validating both OSINT and AI-generated content. By checking currency, relevance, authority, accuracy, and purpose, analysts and researchers can systematically identify and mitigate the limitations of AI-based OSINT tools (outdated knowledge, lack of source transparency, bias, and factual errors), thereby improving the trustworthiness and utility of the intelligence they produce. This approach is vital in an information environment prone to misinformation, bias, and manipulation.

LIMITATIONS

The CRAAP test is a valuable starting point for evaluating AI-generated OSINT data, but it has several limitations when applied to this context:

- Opaque Source Attribution: AI-generated outputs often do not provide clear, traceable sources or may fabricate references entirely, making it difficult to assess Authority and Accuracy using traditional CRAAP criteria. Analysts may struggle to verify the origin or credibility of the information presented.

- Outdated or Incomplete Information: Many AI models have a fixed knowledge cutoff and do not access real-time data, which can lead to outdated or incomplete outputs. This undermines the Currency criterion, as it may not be clear how current the information actually is.

- Surface-Level Relevance: AI tools often generate generic or “middle-of-the-road” responses, potentially missing nuanced or alternative viewpoints. This can result in outputs that are only superficially relevant or fail to address the specific needs of the analyst.

- Bias and Hallucination: AI models can reflect biases present in their training data and may generate plausible-sounding but false or misleading information (“hallucinations”). The CRAAP test does not inherently detect these issues, so analysts must remain vigilant for bias and misinformation even when using the framework.

- Lack of Context and Expertise: AI-generated content may lack the depth, context, or domain expertise found in peer-reviewed or specialist sources. The Authority and Purpose criteria are harder to assess because the “author” is an algorithm trained on a vast, uncurated dataset.

- Verification Burden on the User: The CRAAP test relies on the analyst’s ability to independently verify facts, check for supporting evidence, and corroborate claims. Given that AI outputs may not provide verifiable references, this places a significant burden on the user to conduct additional research.

- Potential for Overconfidence: Because AI-generated text often sounds fluent and authoritative, there is a risk that users may overestimate its reliability and skip critical evaluation steps.

In summary, while the CRAAP test provides a useful checklist, its effectiveness is limited by the unique challenges of AI-generated OSINT, especially the lack of transparent sourcing, potential for hallucination, and the need for constant cross-verification. Analysts should supplement the CRAAP test with additional validation strategies and remain cautious about the limitations of AI tools.